[LR Scheduler]warmup

论文Accurate, Large Minibatch SGD: Training ImageNet in 1 Hour使用warmup进行学习率的调整,能够帮助模型的训练

摘要

Deep learning thrives with large neural networks and large datasets. However, larger networks and larger datasets result in longer training times that impede research and development progress. Distributed synchronous SGD offers a potential solution to this problem by dividing SGD minibatches over a pool of parallel workers. Yet to make this scheme efficient, the per-worker workload must be large, which implies nontrivial growth in the SGD minibatch size. In this paper, we empirically show that on the ImageNet dataset large minibatches cause optimization difficulties, but when these are addressed the trained networks exhibit good generalization. Specifically, we show no loss of accuracy when training with large minibatch sizes up to 8192 images. To achieve this result, we adopt a linear scaling rule for adjusting learning rates as a function of minibatch size and develop a new warmup scheme that overcomes optimization challenges early in training. With these simple techniques, our Caffe2-based system trains ResNet50 with a minibatch size of 8192 on 256 GPUs in one hour, while matching small minibatch accuracy. Using commodity hardware, our implementation achieves ∼90% scaling efficiency when moving from 8 to 256 GPUs. This system enables us to train visual recognition models on internetscale data with high efficiency.

深度学习在大型神经网络和大型数据集的支持下蓬勃发展。然而,更大的网络和更大的数据集导致更长的训练时间,这阻碍了研发进展。分布式同步SGD通过将SGD小批次划分到一个并行工作池中,为这个问题提供了一个潜在的解决方案。然而,要使这一方案有效,每个处理器的工作量必须很大,这意味着SGD小批次规模的显著增长。在本文中,我们根据经验表明,在ImageNet数据集上,大型小批次会导致优化困难,但当这些问题得到解决时,经过训练的网络会表现出良好的泛化能力。具体来说,当使用大尺寸小批次进行训练时,我们显示的精确度不会降低,最高可达8192幅图像。为了实现这一结果,我们采用线性缩放规则来调整学习速率作为迷你批次大小的函数,并开发了一种新的预热方案来克服训练早期的优化挑战。通过这些简单的技术,在保证训练精度的同时,我们基于Caffe2的系统能够一个小时内在256个GPU上用单次8192个的小批量训练ResNet50。使用商用硬件,我们的实现在从8个GPU切换到256个GPU时实现了大约90%的扩展效率。该系统使我们能够在互联网规模的数据上高效地训练视觉识别模型

2.2 Warmup

As we discussed, for large minibatches (e.g., 8k) the linear scaling rule breaks down when the network is changing rapidly, which commonly occurs in early stages of training. We find that this issue can be alleviated by a properly designed warmup [16], namely, a strategy of using less aggressive learning rates at the start of training.

正如我们所讨论的,对于大型小批次(例如8k)训练,当网络快速变化时,线性缩放规则就会失效,这通常发生在训练的早期阶段。我们发现这个问题可以通过一个适当设计的热身[16]来缓解,也就是说,在训练开始时使用不太激进的学习率策略

Constant warmup. The warmup strategy presented in [16] uses a low constant learning rate for the first few epochs of training. As we will show in §5, we have found constant warmup particularly helpful for prototyping object detection and segmentation methods [9, 30, 25, 14] that fine-tune pre-trained layers together with newly initialized layers.

恒定预热。[16]提出的热身策略在训练的最初几个阶段使用了较低的恒定学习率。正如我们将在第5节中所展示的,我们已经发现持续的预热对于原型目标检测和分割方法[9,30,25,14]特别有帮助,该方法将预训练层与新初始化的层一起微调

In our ImageNet experiments with a large minibatch of size \(kn\), we have tried to train with the low learning rate of \(η\) for the first 5 epochs and then return to the target learning rate of \(\hat{η} = kη\). However, given a large \(k\), we find that this constant warmup is not sufficient to solve the optimization problem, and a transition out of the low learning rate warmup phase can cause the training error to spike. This leads us to propose the following gradual warmup.

在ImageNet训练中,我们使用了一个批量为\(kn\)的训练,尝试在前5个周期内以大小为\(η\)的低学习率进行训练,然后返回到目标学习率\(\hat{η} = kη\)。然而,给定一个大的\(k\),我们发现这种恒定预热不足以解决优化问题,并且从低学习速率预热阶段的过渡会导致训练误差激增。这导致我们提议接下来的逐步热身

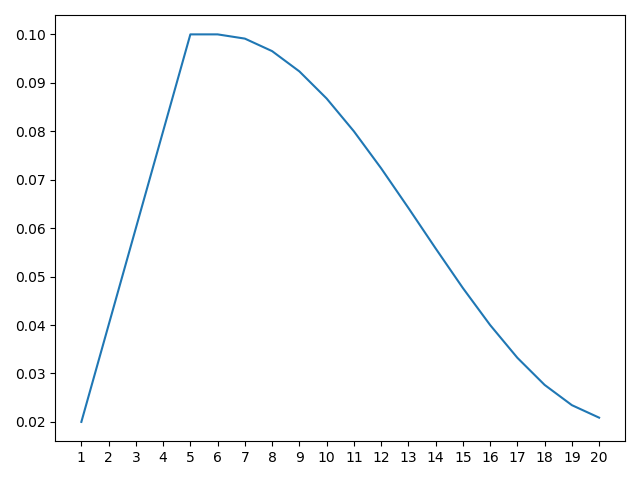

Gradual warmup. We present an alternative warmup that gradually ramps up the learning rate from a small to a large value. This ramp avoids a sudden increase from a small learning rate to a large one, allowing healthy convergence at the start of training. In practice, with a large minibatch of size \(kn\), we start from a learning rate of \(η\) and increment it by a constant amount at each iteration such that it reaches \(\hat{η} = kη\) after 5 epochs. After the warmup phase, we go back to the original learning rate schedule.

逐步热身。我们提出了一个替代的热身方法,逐渐将学习速度从小值提高到大值。这个斜坡避免了从一个小的学习率到一个大的学习率的突然增加,允许在训练开始时健康的收敛。在实践中,对于大小为\(kn\)的大型小批量训练,我们从\(η\)的学习速率开始,并在每次迭代中以恒定的量递增,使得它在5个时期后达到$ = kη$的值。热身阶段结束后,我们回到原先的学习策略

优点

参考:

神经网络中 warmup 策略为什么有效;有什么理论解释么?

What does “learning rate warm-up” mean?

What happened to differential learning rates and warmup?

warmup具有以下优点:

- 有助于减缓模型在初始阶段对

mini-batch的提前过拟合现象,保持分布的平稳 - 有助于保持模型深层的稳定性

PyTorch实现

仓库ildoonet/pytorch-gradual-warmup-lr自定义了Warmup学习器,并且得到了PyTorch的官方推荐 - PyTorch Best Practices @PyTorchPractice

使用过程中发现了一些Userwarning

1 | /home/zj/anaconda3/lib/python3.7/site-packages/torch/optim/lr_scheduler.py:143: UserWarning: The epoch parameter in `scheduler.step()` was not necessary and is being deprecated where possible. Please use `scheduler.step()` to step the scheduler. During the deprecation, if epoch is different from None, the closed form is used instead of the new chainable form, where available. Please open an issue if you are unable to replicate your use case: https://github.com/pytorch/pytorch/issues/new/choose. |

fork该仓库进行了一些调整:zjZSTU/pytorch-gradual-warmup-lr

注意:默认情况下GradualWarmupScheduler会从lr=0开始,可以在最开始阶段先执行一次step()

1 |

|