[Gradio] Automatically Generating Interactive User Interfaces

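Gradio is an open-source Python package that automatically builds interactive user interfaces, helping engineers quickly show others what their algorithms can do.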

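Overview

You only need to implement the algorithm inside an interface function; Gradio then generates an interactive web application around it that shows the algorithm's results.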

Installation

Gradio requires Python 3.8 or later and can be installed with pip3:

    pip3 install gradio -i http://mirrors.aliyun.com/pypi/simple/ --trusted-host mirrors.aliyun.com

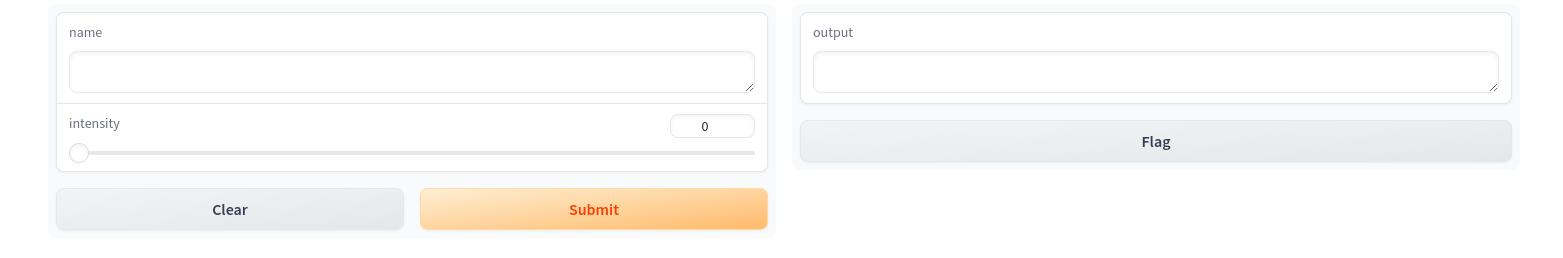

Official demo: printing text

Write main.py, which implements the function greet(name, intensity) that prints text.

    import gradio as gr
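A minimal sketch of a complete main.py, modeled on the greeting example in Gradio's quickstart. The body of greet and the choice of a text box plus a slider as inputs are assumptions, not necessarily the author's exact code, and build_demo is a hypothetical helper that imports gradio lazily so greet can be tried on its own.

```python
def greet(name, intensity):
    """Return a greeting followed by `intensity` exclamation marks."""
    # A slider may deliver a float, so convert before repeating the string.
    return "Hello, " + name + "!" * int(intensity)


def build_demo():
    """Wrap greet in a Gradio Interface (assumed layout: text + slider in, text out)."""
    import gradio as gr  # imported here so greet() works without Gradio installed

    return gr.Interface(fn=greet, inputs=["text", "slider"], outputs=["text"])


# To start the web app on the default port 7860:
#     build_demo().launch()
```

Calling greet("World", 3) directly returns "Hello, World!!!", which is the text the web page would show for the same inputs.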

Run the program; by default it listens locally on port 7860.

    $ python3 main.py

Online deployment

Gradio Only

Gradio has a built-in feature for quick online deployment: pass share=True to the launch() function.

    # demo.launch()

After launching, Gradio prints a temporary public URL. In my testing, however, the link could not be reached.

    $ python3 main.py

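Gradio + Nginx

The next attempt is to host Gradio on a cloud server. First start the Gradio program on the server, keeping three points in mind.

Note 1: set the server address to 0.0.0.0 rather than localhost, so that the app listens on all network interfaces.

Note 2: the port on which Gradio listens locally on the cloud server can be specified explicitly.

Note 3: set the root_path parameter to the same sub-path that is used in the Nginx configuration file.

    demo.launch(server_name="0.0.0.0", server_port=7860, root_path="/gradio/demo/")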
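The launch variants discussed so far (local, share link, behind a reverse proxy) differ only in the keyword arguments passed to launch(). As a rough sketch, they can be collected in one place; launch_options and its mode names are hypothetical, while share, server_name, server_port and root_path are the launch() parameters mentioned above.

```python
def launch_options(mode="local", port=7860, root_path="/gradio/demo/"):
    """Return keyword arguments for demo.launch() for one of three modes."""
    if mode == "local":
        # Defaults: listen on localhost, port 7860.
        return {}
    if mode == "share":
        # Ask Gradio for a temporary public link.
        return {"share": True}
    if mode == "proxy":
        # Listen on all interfaces so Nginx can forward requests to the app.
        return {"server_name": "0.0.0.0", "server_port": port, "root_path": root_path}
    raise ValueError(f"unknown mode: {mode!r}")


# Usage, given an existing Gradio app object named demo:
#     demo.launch(**launch_options("proxy"))
```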

Once the program is running, it can be reached remotely on port 7860.

    python3 main.py

To put Nginx in front as a reverse proxy, edit its configuration file:

    location /gradio/demo/ { # Change this if you'd like to serve your Gradio app on a different path
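Since the same kind of location block is needed for every app path, a small Python helper can render it. The directive list below is an assumption modeled on the reverse-proxy example in Gradio's Nginx guide, not a copy of the author's configuration; render_location is a hypothetical name.

```python
def render_location(path, port=7860):
    """Render an Nginx location block that proxies `path` to a local Gradio app."""
    directives = [
        f"proxy_pass http://127.0.0.1:{port}/;",  # port the Gradio app listens on
        "proxy_buffering off;",
        "proxy_redirect off;",
        "proxy_http_version 1.1;",
        "proxy_set_header Upgrade $http_upgrade;",  # allow WebSocket upgrades
        'proxy_set_header Connection "upgrade";',
        "proxy_set_header Host $host;",
    ]
    body = "\n".join("    " + line for line in directives)
    return f"location {path} {{\n{body}\n}}\n"


print(render_location("/gradio/demo/"))
```

The path passed in should match the root_path given to launch(), and the port should match server_port.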

After restarting Nginx, the app is available at https://blog.zjykzj.cn/gradio/demo/

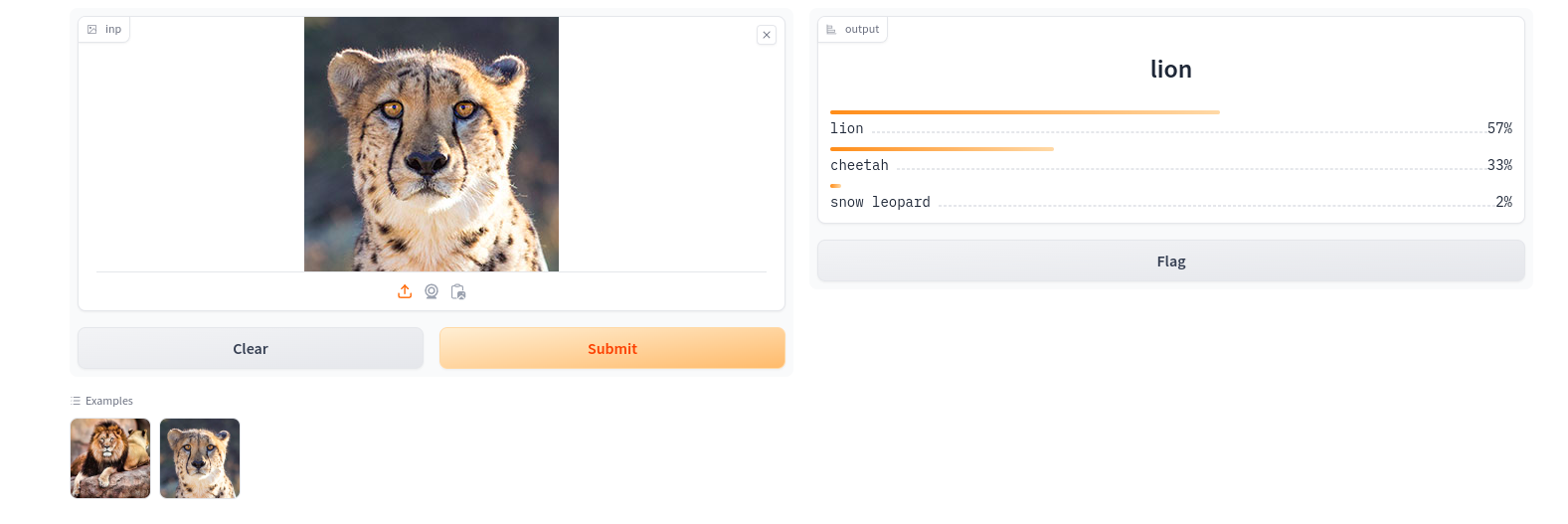

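Image classification

Gradio provides a result view tailored to image classification that displays each label together with its class probability. The implementation below uses Gradio + PyTorch + Torchvision: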

    # -*- coding: utf-8 -*-
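The label-plus-probability view is driven by a dictionary that maps each label to a confidence value, which is what the gr.Label component accepts. A minimal sketch of that conversion follows; to_label_scores, build_demo and the predict_logits callable are hypothetical names, and the model call itself is left out because it depends on the network being served.

```python
import math


def to_label_scores(logits, labels, top_k=3):
    """Softmax the logits and return the top_k classes as {label: probability}."""
    peak = max(logits)  # subtract the maximum for numerical stability
    exps = [math.exp(x - peak) for x in logits]
    total = sum(exps)
    ranked = sorted(zip(labels, exps), key=lambda pair: pair[1], reverse=True)
    return {label: value / total for label, value in ranked[:top_k]}


def build_demo(predict_logits, labels):
    """Build the UI; predict_logits(image) -> list of floats is a hypothetical model wrapper."""
    import gradio as gr  # imported lazily so the helper above has no dependencies

    def classify(image):
        return to_label_scores(predict_logits(image), labels)

    return gr.Interface(
        fn=classify,
        inputs=gr.Image(type="pil"),
        outputs=gr.Label(num_top_classes=3),
    )
```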

The same demo was then reworked to use Gradio + ONNX + ONNX Runtime and deployed to the cloud server (https://blog.zjykzj.cn/gradio/classify/). The implementation is as follows:

    # -*- coding: utf-8 -*-
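For the ONNX Runtime variant, the inference step usually reduces to opening an InferenceSession and calling run() with the input tensor. The sketch below shows only that step, under assumptions: the file name model.onnx is a placeholder, the model is assumed to have a single input, and batch is expected to be a float32 NumPy array already preprocessed to the shape the model requires.

```python
def load_session(model_path="model.onnx"):
    """Open an ONNX model on the CPU; the file name is a placeholder."""
    import onnxruntime as ort  # imported lazily; requires the onnxruntime package

    return ort.InferenceSession(model_path, providers=["CPUExecutionProvider"])


def run_model(session, batch):
    """Run one forward pass and return the first output for the first sample."""
    input_name = session.get_inputs()[0].name  # assumes a single model input
    outputs = session.run(None, {input_name: batch})  # None requests all outputs
    return outputs[0][0]
```

The returned scores can then be turned into the label dictionary that the classification view displays.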

To deploy it on the cloud server, add another location to the Nginx configuration file:

    location /gradio/classify/ { # Change this if you'd like to serve your Gradio app on a different path

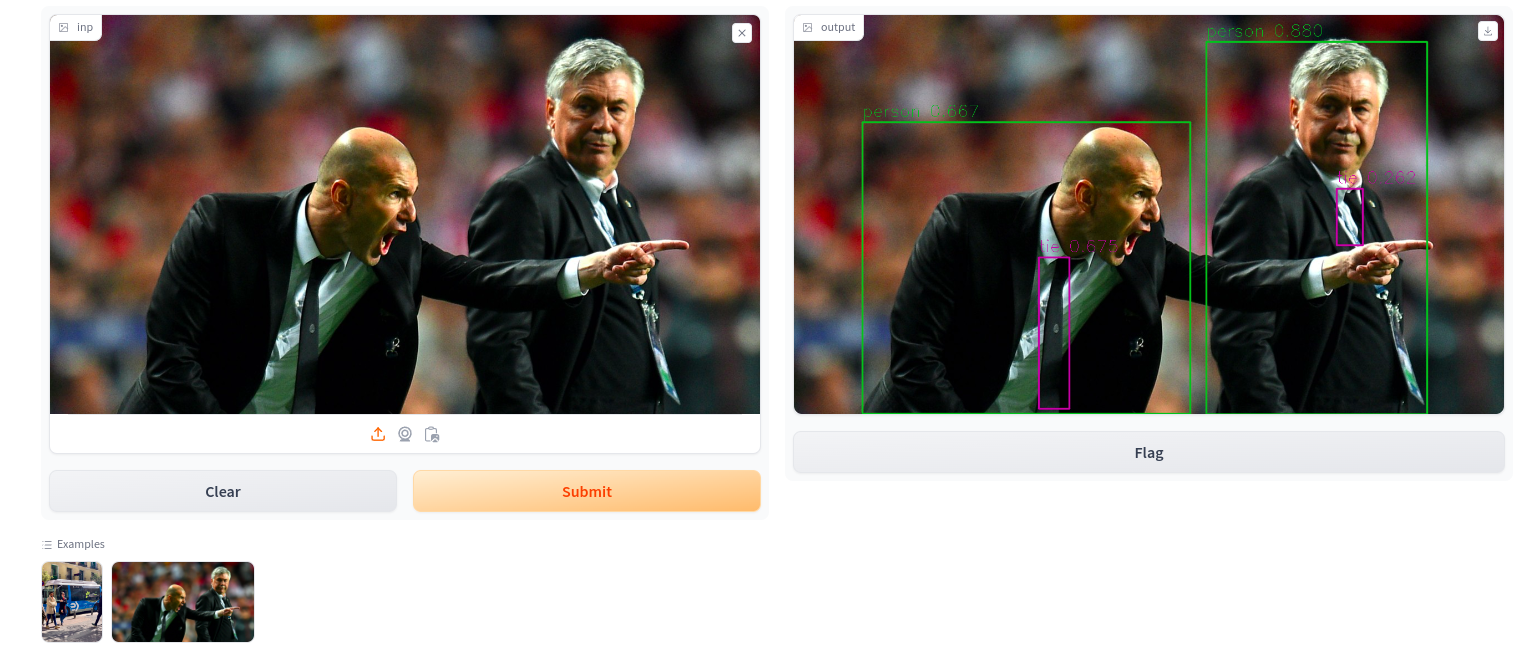

Image detection

Gradio can also be used to demonstrate an object detection algorithm. The implementation below uses Gradio + PyTorch:

    # -*- coding: utf-8 -*-
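One possible way to display detections, which may differ from the author's approach, is Gradio's AnnotatedImage component: it takes the base image plus a list of (bounding box, label) pairs. In the sketch below, the row layout (x1, y1, x2, y2, score, label), the names to_annotations and build_demo, and the detect callable are all assumptions for illustration.

```python
def to_annotations(detections, score_threshold=0.5):
    """Convert (x1, y1, x2, y2, score, label) rows to ((x1, y1, x2, y2), caption) pairs."""
    annotations = []
    for x1, y1, x2, y2, score, label in detections:
        if score < score_threshold:
            continue  # drop low-confidence boxes
        box = (int(x1), int(y1), int(x2), int(y2))  # pixel coordinates as integers
        annotations.append((box, f"{label} {score:.2f}"))
    return annotations


def build_demo(detect):
    """Build the UI; detect(image) -> list of rows is a hypothetical model wrapper."""
    import gradio as gr  # imported lazily so to_annotations has no dependencies

    def annotate(image):
        return image, to_annotations(detect(image))

    return gr.Interface(
        fn=annotate,
        inputs=gr.Image(type="pil"),
        outputs=gr.AnnotatedImage(),
    )
```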

The same demo was then reworked to use Gradio + ONNX + ONNX Runtime and deployed to the cloud server (https://blog.zjykzj.cn/gradio/detect/). The implementation is as follows:

    # -*- coding: utf-8 -*-

To deploy it on the cloud server, add another location to the Nginx configuration file:

    location /gradio/detect/ { # Change this if you'd like to serve your Gradio app on a different path